To convert a Markdown file to HTML, use this command: `pandoc test1.md -f markdown -t html -s -o test1.html`. The filename test1.md tells pandoc which file to convert; `-f markdown` and `-t html` name the input and output formats, and `-o test1.html` names the output file. The `-s` option says to create a “standalone” file, with a header and footer, not just a fragment.

I'm trying to convert LaTeX code embedded in an HTML document (intended to be used with a JavaScript shim) into MathML. Pandoc seems like a great tool. I am following this example: http://pandoc.org/demos.


- Feb 11, 2015: Your example worked for LaTeX/PDF output because pandoc passes raw TeX through to these formats (but not to HTML). Labels and references don't work with pandoc math. It occurs to me that it might make sense to pass raw LaTeX environments through to HTML in the special case where `--mathjax` is used. This would solve your problem nicely.

Pandoc is the Swiss Army knife for converting files from one markup format into another:

What does Pandoc do?

Pandoc can convert documents from

- markdown, reStructuredText, textile, HTML, DocBook, LaTeX, MediaWiki markup, TWiki markup, OPML, Emacs Org-Mode, Txt2Tags, Microsoft Word docx, EPUB, or Haddock markup

to

- HTML formats: XHTML, HTML5, and HTML slide shows using Slidy, reveal.js, Slideous, S5, or DZSlides.

- Word processor formats: Microsoft Word docx, OpenOffice/LibreOffice ODT, OpenDocument XML

- Ebooks: EPUB version 2 or 3, FictionBook2

- Documentation formats: DocBook, GNU Texinfo, Groff man pages, Haddock markup

- Page layout formats: InDesign ICML

- Outline formats: OPML

- TeX formats: LaTeX, ConTeXt, LaTeX Beamer slides

- PDF via LaTeX

- Lightweight markup formats: Markdown, reStructuredText, AsciiDoc, MediaWiki markup, DokuWiki markup, Emacs Org-Mode, Textile

What does Pandoc do for me?

I use pandoc to convert documents from

- markdown

to

- HTML

- Microsoft Word docx (force majeure!), OpenOffice/LibreOffice ODT, OpenDocument XML

- LaTeX Beamer slides

- PDF via LaTeX

What does Pandoc do better than the specialized tools?

Accessibility:

Code in markdown is easily readable text. In comparison:

- Markdown syntax is handier than (La)TeX syntax (Donald Knuth, inventor of TeX, wondered why it took so long to evolve from LaTeX to a more efficient markup language that compiles down to TeX, such as markdown), and in particular handier than LaTeX Beamer syntax,

- math formulas are more easily written in Markdown than in Microsoft Word or LibreOffice, and

- it is especially suited for creating short HTML articles, such as blog entries.

What does Pandoc do worse than the specialized tools?

- Functions specific to a markup language either cannot be used, or can be used but may break conversion into other formats. (The pandoc syntax is reduced to the common base of all markup languages into which it converts.)

- For more complex documents, there is a certain consensus that the similar AsciiDoc format is more powerful and better suited (publisher’s choice).

- Pandoc is still in development: the output is sometimes rough and needs to be retouched, the documentation is incomplete, and the ecosystem of tools, like editors and IDEs, is smaller. For example:

- LaTeX supports forward and inverse search, which lets you jump from a position in the source TeX file to the corresponding position in the compiled PDF file, and the other way around. There is no such thing for markdown, since markdown first compiles to TeX and then to PDF.

- The Vim plugin for markdown is young and basic in comparison to that for LaTeX, which is stable and powerful.

Markdown is simple, concise and intuitive: its cheat sheet and its documentation are one and the same.

Examples:

Source:

Output:

An emphasized itemization:

- dog

- fox

A bold enumeration:

1. Mum

2. Dad

A table

| | mum | dad |
|---|---|---|
| weight | 100 kg | 200 kg |
| height | 1,20 m | 2,10 m |

We use

- a Makefile that sets a couple of compilation options, and

- a main markdown file that sets a couple of document options.

Which parameters are set on the command line and which in the document is a somewhat arbitrary choice, and perhaps a shadow of pandoc’s unfinished state.

Pandoc parameters

We can pass many options to pandoc, among those the most important ones (for us) are:

Makefile

With a makefile, instead of passing the options for

- compilation,

- running,

- checking and cleaning

on the command line each time, we call make (or make run/check/clean) and use the options set once and for all in the makefile.

The command make, corresponding to the entry all:, generates the output file, in our case the PDF document. For example,

- make docx generates a docx document,

- make html generates an HTML document,

- make latex generates a TeX document.

The entry all: latex pdf is the default target, that is, make first generates a TeX and then a PDF document.

We recommend latexrun as a good LaTeX “debugger”. Still, note that we first have to spot the error in the TeX file, then the corresponding one in the markdown document.

The command run displays the output file, for example,

- make run-html shows the HTML document in a browser (such as Firefox),

- make run-odt shows the ODT document in LibreOffice.

The entry run is the default, that is, make run displays the PDF document in a PDF viewer (such as zathura).

Finally, make clean removes all output files.
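To make the target structure concrete, here is a small Python sketch of the same mapping from targets to commands. The file names `main.md`/`main.*` and the choice to build the PDF from the TeX file with latexrun are assumptions for illustration; the viewers are the ones named above. By default `make` only prints the commands (a dry run).

```python
import shlex
import subprocess

SOURCE = "main.md"  # hypothetical main markdown file
STEM = "main"       # hypothetical stem shared by all output files

# Target name -> list of commands, mirroring the make targets described above.
TARGETS = {
    "docx": [["pandoc", "-s", SOURCE, "-o", f"{STEM}.docx"]],
    "odt": [["pandoc", "-s", SOURCE, "-o", f"{STEM}.odt"]],
    "html": [["pandoc", "-s", SOURCE, "-o", f"{STEM}.html"]],
    "latex": [["pandoc", "-s", SOURCE, "-o", f"{STEM}.tex"]],
    "pdf": [["latexrun", f"{STEM}.tex"]],  # assumption: PDF built from the .tex file
    "run": [["zathura", f"{STEM}.pdf"]],
    "run-html": [["firefox", f"{STEM}.html"]],
    "run-odt": [["libreoffice", f"{STEM}.odt"]],
    "clean": [["rm", "-f"] + [f"{STEM}.{ext}" for ext in ("docx", "odt", "html", "tex", "pdf")]],
}
# Like "all: latex pdf": first the TeX file, then the PDF.
TARGETS["all"] = TARGETS["latex"] + TARGETS["pdf"]


def make(target="all", dry_run=True):
    """Print the commands for a target and, unless dry_run, execute them."""
    for cmd in TARGETS[target]:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

For example, `make("all")` prints the pandoc call that writes `main.tex` followed by the latexrun call; passing `dry_run=False` actually runs them.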

Main file

This file sets the title, author and date of the document at the top. Below them come additional options:

- one general option, lang, which controls, for example, the labeling of the table of contents and references, and

- various TeX options, such as the document type, the font size, and the depth of the section numbering.
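Such a header is a YAML metadata block at the top of the markdown file. The Python sketch below generates one; it assumes pandoc's standard variable names `lang`, `documentclass`, `fontsize` and `secnumdepth` for the options just listed, and the default values are arbitrary examples.

```python
def main_file_header(title, author, date, lang="en",
                     documentclass="article", fontsize="11pt", secnumdepth=2):
    """Return a YAML metadata block for the top of a pandoc markdown file.

    Values are written unquoted, so they must not contain YAML special
    characters such as ': ' (a deliberate simplification).
    """
    lines = [
        "---",
        f"title: {title}",
        f"author: {author}",
        f"date: {date}",
        f"lang: {lang}",                    # language: labels such as "Contents"
        f"documentclass: {documentclass}",  # LaTeX document type
        f"fontsize: {fontsize}",            # LaTeX base font size
        f"secnumdepth: {secnumdepth}",      # depth of section numbering
        "---",
        "",
    ]
    return "\n".join(lines)
```

Writing the returned string to the start of a new `.md` file gives pandoc everything it needs for the title block and the TeX options.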

Let us make compiling and editing pandoc files easier: the former with built-in Vim functionality, the latter with dedicated plugins.

Automatic compilation and reload

To make Vim compile our file after every save, add to the (newly created, if necessary) file ~/.vim/after/ftplugin/markdown.vim the line:

If the output is

- PDF (via TeX), then the PDF viewer zathura automatically reloads the changed PDF file;

- HTML, then the Firefox plugin autoreload automatically reloads the changed HTML file.

Editing enhancements

The plugin vim-pandoc

- completes references in your library when hitting the `<Tab>` key,

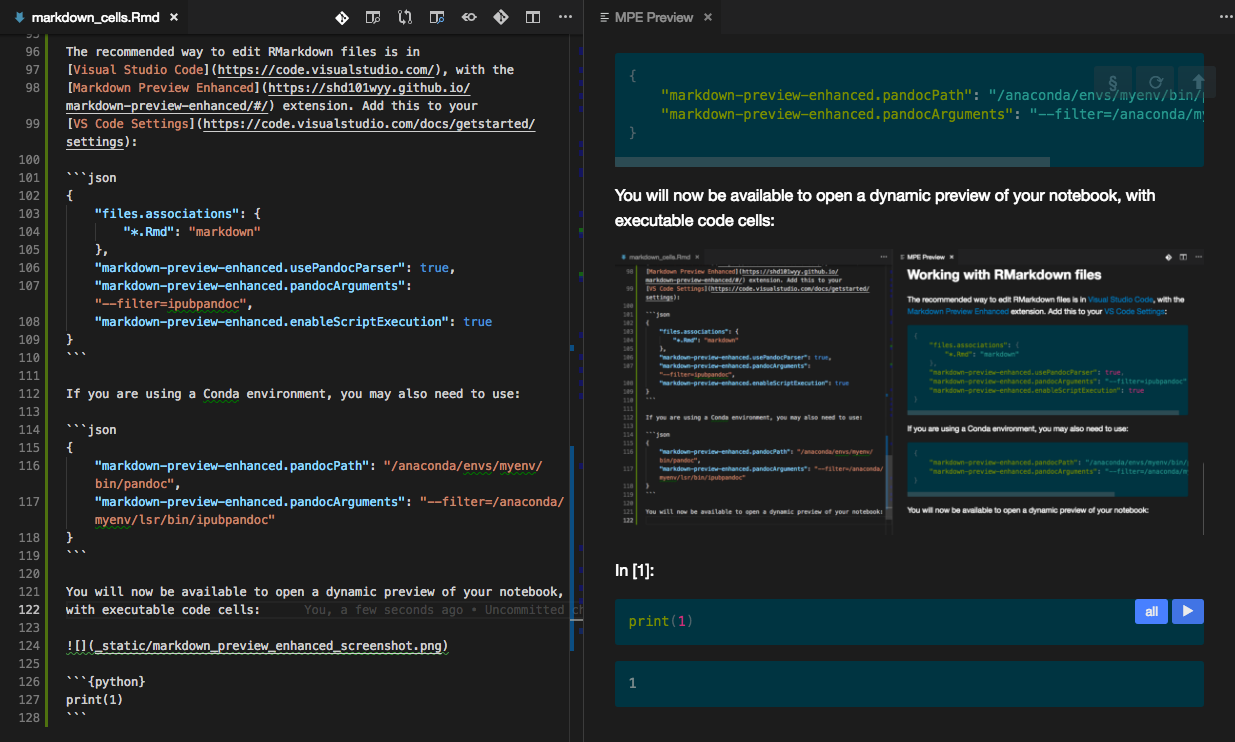

- folds sections and code,

- gives a Table of Contents.

The plugin UltiSnips expands snippets into lengthier markdown syntax, such as a link `[link](http://url 'title')`.

The plugin vim-template prefills

- a new makefile with the boilerplate makefile code above, and

- a new pandoc file with the boilerplate main file code above.


Pandoc provides an interface for users to write programs (known as filters) which act on pandoc’s AST.

Pandoc consists of a set of readers and writers. When converting a document from one format to another, text is parsed by a reader into pandoc’s intermediate representation of the document—an “abstract syntax tree” or AST—which is then converted by the writer into the target format. The pandoc AST format is defined in the module Text.Pandoc.Definition in the pandoc-types package.

A “filter” is a program that modifies the AST, between the reader and the writer.

Pandoc supports two kinds of filters:

Lua filters use the Lua language to define transformations on the pandoc AST. They are described in a separate document.

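JSON filters, described here, are pipes that read from standard input and write to standard output, consuming and producing a JSON representation of the pandoc AST: pandoc parses the source into a JSON-formatted AST, the filter transforms that AST, and pandoc writes the result in the target format.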

Lua filters have a couple of advantages. They use a Lua interpreter that is embedded in pandoc, so you don’t need to have any external software installed. And they are usually faster than JSON filters. But if you wish to write your filter in a language other than Lua, you may prefer to use a JSON filter. JSON filters may be written in any programming language.
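Whatever the language, the contract is the same: read the JSON AST from standard input, take the target format from the first command-line argument, and write JSON to standard output. A minimal, stdlib-only Python sketch of that contract follows; `apply_filter` and `identity` are hypothetical names, and the sketch assumes the current JSON layout (a top-level object with `pandoc-api-version`, `meta` and `blocks` keys).

```python
import json
import sys


def apply_filter(transform, raw_json, fmt):
    """Parse a pandoc JSON AST, apply transform(doc, fmt), and re-serialize it."""
    doc = json.loads(raw_json)  # accepts str or UTF-8 bytes
    return json.dumps(transform(doc, fmt))


def identity(doc, fmt):
    # A do-nothing transformation: pandoc would write the document unchanged.
    return doc


def main():
    # pandoc passes the target format (e.g. "html") as the first argument.
    fmt = sys.argv[1]
    sys.stdout.write(apply_filter(identity, sys.stdin.buffer.read(), fmt))


# pandoc always supplies the format argument, so only act as a filter then.
if __name__ == "__main__" and len(sys.argv) > 1:
    main()
```

Because it returns the document untouched, this is a convenient skeleton: a real filter replaces `identity` with a function that rewrites parts of `doc`.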

You can use a JSON filter directly in a pipeline:

But it is more convenient to use the --filter option, which handles the plumbing automatically:
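The two invocation styles can be compared side by side. The Python sketch below only builds the command lines (it does not run pandoc); the file names `input.txt` and `output.html` and the filter `./myfilter` are hypothetical placeholders.

```python
import shlex


def pipeline_commands(source, filter_path, output):
    """The three processes of an explicit shell pipeline."""
    return [
        ["pandoc", "-s", source, "-t", "json"],        # reader: source -> JSON AST
        [filter_path],                                 # filter: JSON -> JSON
        ["pandoc", "-s", "-f", "json", "-o", output],  # writer: JSON -> output
    ]


def filter_option_command(source, filter_path, output):
    """The same conversion with --filter, which does the plumbing itself."""
    return ["pandoc", "-s", source, "--filter", filter_path, "-o", output]


def as_shell(commands):
    """Join a list of argv lists into a single shell pipeline string."""
    return " | ".join(shlex.join(cmd) for cmd in commands)
```

One practical difference: in the hand-built pipeline you invoke the filter yourself, so it receives no target-format argument unless you add one, whereas with `--filter` pandoc passes it automatically.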

For a gentle introduction into writing your own filters, continue this guide. There’s also a list of third party filters on the wiki.

Suppose you wanted to replace all level 2+ headings in a markdown document with regular paragraphs, with text in italics. How would you go about doing this?

A first thought would be to use regular expressions. Something like this:
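In Python, that first thought might look like the sketch below; the pattern is an illustrative assumption that turns every line starting with two or more `#` characters and a space into italic text.

```python
import re

# Naive rule: a line "## Text" (two or more #s, then a space) becomes "*Text*".
NAIVE_HEADING = re.compile(r"^##+ (.*)$", re.MULTILINE)


def naive_behead(markdown_text):
    """Rewrite level 2+ ATX headings as italic text using a regex alone."""
    return NAIVE_HEADING.sub(r"*\1*", markdown_text)
```

It turns `## A heading` into `*A heading*` and leaves a level-1 `# Title` alone, but it also copies closing `#`s into the italic text and happily rewrites `## ...` lines inside fenced code blocks, which are exactly the weaknesses discussed next.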


This should work most of the time. But don’t forget that ATX style headings can end with a sequence of #s that is not part of the heading text, as in `## My heading ##`.

And what if your document contains a line starting with ## in an HTML comment or delimited code block?

We don’t want to touch these lines. Moreover, what about Setext-style second-level headings, written as a line of text underlined with hyphens?

We need to handle those too. Finally, can we be sure that adding asterisks to each side of our string will put it in italics? What if the string already contains asterisks around it? Then we’ll end up with bold text, which is not what we want. And what if it contains a regular unescaped asterisk?

How would you modify your regular expression to handle these cases? It would be hairy, to say the least.

A better approach is to let pandoc handle the parsing, and then modify the AST before the document is written. For this, we can use a filter.

To see what sort of AST is produced when pandoc parses our text, we can use pandoc’s native output format:

A Pandoc document consists of a Meta block (containing metadata like title, authors, and date) and a list of Block elements. In this case, we have two Blocks, a Header and a Para. Each has as its content a list of Inline elements. For more details on the pandoc AST, see the haddock documentation for Text.Pandoc.Definition.

We can use Haskell to create a JSON filter that transforms this AST, replacing each Header block with level >= 2 with a Para with its contents wrapped inside an Emph inline:

The toJSONFilter function does two things. First, it lifts the behead function (which maps Block -> Block) onto a transformation of the entire Pandoc AST, walking the AST and transforming each block. Second, it wraps this Pandoc -> Pandoc transformation with the necessary JSON serialization and deserialization, producing an executable that consumes JSON from stdin and produces JSON to stdout.

To use the filter, make it executable:


and then

(It is also necessary that pandoc-types be installed in the local package repository. To do this using cabal-install, run `cabal v2-update && cabal v2-install --lib pandoc-types`.)

Alternatively, we could compile the filter:

Note that if the filter is placed in the system PATH, then the initial ./ is not needed. Note also that the command line can include multiple instances of --filter: the filters will be applied in sequence.

Another easy example. WordPress blogs require a special format for LaTeX math. Instead of `$e=mc^2$`, you need `$latex e=mc^2$`. How can we convert a markdown document accordingly?

Again, it’s difficult to do the job reliably with regexes. A $ might be a regular currency indicator, or it might occur in a comment or code block or inline code span. We just want to find the $s that begin LaTeX math. If only we had a parser…

We do. Pandoc already extracts LaTeX math, so:

Mission accomplished. (I’ve omitted type signatures here, just to show it can be done.)
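For comparison, here is the same transformation written against the JSON AST in Python, as a stdlib-only sketch. `walk` is a simplified stand-in for the traversal offered by the pandocfilters package, and the sketch relies on the JSON encoding of `Math` as `[mathType, texSource]`. It rewrites every `Math` element, inline or display.

```python
import json
import sys


def walk(x, action, fmt, meta):
    """Simplified top-down traversal in the style of pandocfilters.walk."""
    if isinstance(x, list):
        out = []
        for item in x:
            if isinstance(item, dict) and "t" in item:
                res = action(item["t"], item.get("c"), fmt, meta)
                if res is None:                      # keep node, descend into it
                    out.append(walk(item, action, fmt, meta))
                elif isinstance(res, list):          # splice a list of nodes
                    out.extend(walk(z, action, fmt, meta) for z in res)
                else:                                # replace with a new node
                    out.append(walk(res, action, fmt, meta))
            else:
                out.append(walk(item, action, fmt, meta))
        return out
    if isinstance(x, dict):
        return {k: walk(v, action, fmt, meta) for k, v in x.items()}
    return x


def wordpressify(key, value, fmt, meta):
    # Math content is [mathType, texSource]; emit WordPress's $latex ...$ as raw HTML.
    if key == "Math":
        return {"t": "RawInline", "c": ["html", "$latex " + value[1] + "$"]}
    return None


def main():
    doc = json.load(sys.stdin.buffer)
    json.dump(walk(doc, wordpressify, sys.argv[1], doc.get("meta", {})), sys.stdout)


if __name__ == "__main__" and len(sys.argv) > 1:
    main()
```

Because the parser has already separated math from currency signs, code spans and comments, the filter never needs to look at a `$` character itself.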


While it’s easiest to write pandoc filters in Haskell, it is fairly easy to write them in Python using the pandocfilters package. The package is in PyPI and can be installed using pip install pandocfilters or easy_install pandocfilters.

Here’s our “beheading” filter in python:
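The sketch below implements it in plain Python without installing pandocfilters. `walk` and `toJSONFilter` here are simplified stand-ins written for this example that mimic the behaviour described next; with the real package you would instead write `from pandocfilters import toJSONFilter, Emph, Para`.

```python
import json
import sys


def walk(x, action, fmt, meta):
    """Simplified stand-in for pandocfilters.walk (top-down traversal).

    Each element node {"t": ..., "c": ...} found in a list is passed to
    action(key, value, fmt, meta): None keeps the node and descends into it,
    a dict replaces it, and a list is spliced in its place.
    """
    if isinstance(x, list):
        out = []
        for item in x:
            if isinstance(item, dict) and "t" in item:
                res = action(item["t"], item.get("c"), fmt, meta)
                if res is None:
                    out.append(walk(item, action, fmt, meta))
                elif isinstance(res, list):
                    out.extend(walk(z, action, fmt, meta) for z in res)
                else:
                    out.append(walk(res, action, fmt, meta))
            else:
                out.append(walk(item, action, fmt, meta))
        return out
    if isinstance(x, dict):
        return {k: walk(v, action, fmt, meta) for k, v in x.items()}
    return x


def toJSONFilter(action):
    """Simplified stand-in: JSON AST on stdin -> walk -> JSON AST on stdout."""
    doc = json.load(sys.stdin.buffer)
    fmt = sys.argv[1] if len(sys.argv) > 1 else ""
    json.dump(walk(doc, action, fmt, doc.get("meta", {})), sys.stdout)


def behead(key, value, fmt, meta):
    # Header content is [level, attr, inlines]; level 2+ becomes Para [Emph inlines].
    if key == "Header" and value[0] >= 2:
        return {"t": "Para", "c": [{"t": "Emph", "c": value[2]}]}
    return None


if __name__ == "__main__" and len(sys.argv) > 1:
    toJSONFilter(behead)
```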

toJSONFilter(behead) walks the AST and applies the behead action to each element. If behead returns nothing, the node is unchanged; if it returns an object, the node is replaced; if it returns a list, the new list is spliced in.

Note that, although these parameters are not used in this example, format provides access to the target format, and meta provides access to the document’s metadata.

There are many examples of python filters in the pandocfilters repository.

For a more Pythonic alternative to pandocfilters, see the panflute library. Don’t like Python? There are also ports of pandocfilters in

- PHP,

- Perl,

- TypeScript/JavaScript via Node.js (pandoc-filter and node-pandoc-filter),

- Groovy, and

- Ruby.

Starting with pandoc 2.0, pandoc includes built-in support for writing filters in Lua. The Lua interpreter is built into pandoc, so a Lua filter does not require any additional software to run. See the documentation on Lua filters.

So far, none of our transforms has involved IO. How about a script that reads a markdown document, finds all the code blocks with attribute include, and replaces their contents with the contents of the given file?

Try this on a document containing a delimited code block with an `include` attribute that names an existing file.
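In Python the IO is simply an ordinary file read inside the action. A stdlib-only sketch follows; `walk` is again a simplified stand-in for the traversal pandocfilters provides, `do_include` is a hypothetical name, and included files are assumed to be UTF-8 text.

```python
import json
import sys


def walk(x, action, fmt, meta):
    """Simplified top-down traversal in the style of pandocfilters.walk."""
    if isinstance(x, list):
        out = []
        for item in x:
            if isinstance(item, dict) and "t" in item:
                res = action(item["t"], item.get("c"), fmt, meta)
                if res is None:
                    out.append(walk(item, action, fmt, meta))
                elif isinstance(res, list):
                    out.extend(walk(z, action, fmt, meta) for z in res)
                else:
                    out.append(walk(res, action, fmt, meta))
            else:
                out.append(walk(item, action, fmt, meta))
        return out
    if isinstance(x, dict):
        return {k: walk(v, action, fmt, meta) for k, v in x.items()}
    return x


def do_include(key, value, fmt, meta):
    # CodeBlock content is [[identifier, classes, key-value pairs], text].
    if key != "CodeBlock":
        return None
    attr = value[0]
    path = dict(attr[2]).get("include")
    if path is None:
        return None
    with open(path, encoding="utf-8") as f:  # the IO step
        return {"t": "CodeBlock", "c": [attr, f.read()]}


def main():
    doc = json.load(sys.stdin.buffer)
    json.dump(walk(doc, do_include, sys.argv[1], doc.get("meta", {})), sys.stdout)


if __name__ == "__main__" and len(sys.argv) > 1:
    main()
```

The block keeps its identifier, classes and attributes, so the `include` attribute stays on the rewritten code block.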

What if we want to remove every link from a document, retaining the link’s text?

Note that delink can’t be a function of type Inline -> Inline, because the thing we want to replace the link with is not a single Inline element, but a list of them. So we make delink a function from an Inline element to a list of Inline elements. toJSONFilter can still lift this function to a transformation of type Pandoc -> Pandoc.
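In a JSON-based Python filter, the same "return a list" idea is what makes splicing work: the action hands back the link's inline contents, and the traversal splices them in place of the `Link` node. A stdlib-only sketch, with `walk` as a simplified stand-in for pandocfilters' traversal and the JSON encoding of `Link` assumed to be `[attr, inlines, [url, title]]`:

```python
import json
import sys


def walk(x, action, fmt, meta):
    """Simplified top-down traversal in the style of pandocfilters.walk."""
    if isinstance(x, list):
        out = []
        for item in x:
            if isinstance(item, dict) and "t" in item:
                res = action(item["t"], item.get("c"), fmt, meta)
                if res is None:
                    out.append(walk(item, action, fmt, meta))
                elif isinstance(res, list):              # a list is spliced in
                    out.extend(walk(z, action, fmt, meta) for z in res)
                else:
                    out.append(walk(res, action, fmt, meta))
            else:
                out.append(walk(item, action, fmt, meta))
        return out
    if isinstance(x, dict):
        return {k: walk(v, action, fmt, meta) for k, v in x.items()}
    return x


def delink(key, value, fmt, meta):
    # Link content is [attr, inlines, [url, title]]; keep only the inlines.
    if key == "Link":
        return value[1]
    return None


def main():
    doc = json.load(sys.stdin.buffer)
    json.dump(walk(doc, delink, sys.argv[1], doc.get("meta", {})), sys.stdout)


if __name__ == "__main__" and len(sys.argv) > 1:
    main()
```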

Finally, here’s a nice real-world example, developed on the pandoc-discuss list. Qubyte wrote:

I’m interested in using pandoc to turn my markdown notes on Japanese into nicely set HTML and (Xe)LaTeX. With HTML5, ruby (typically used to phonetically read Chinese characters by placing text above or to the side) is standard, and support from browsers is emerging (WebKit-based browsers appear to fully support it). For those browsers that don’t support it yet (notably Firefox) the feature falls back in a nice way by placing the phonetic reading inside brackets to the side of each Chinese character, which is suitable for other output formats too. As for (Xe)LaTeX, ruby is not an issue.

At the moment, I use inline HTML to achieve the result when the conversion is to HTML, but it’s ugly and uses a lot of keystrokes, for example

sets ご飯 “gohan” with “han” spelt phonetically above the second character, or to the right of it in brackets if the browser does not support ruby. I’d like to have something more like

or any keystroke saving convention would be welcome.

We came up with the following script, which uses the convention that a markdown link with a URL beginning with a hyphen is interpreted as ruby:

Note that, when a script is called using --filter, pandoc passes it the target format as the first argument. When a function’s first argument is of type Maybe Format, toJSONFilter will automatically assign it Just the target format or Nothing.
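A Python rendering of the same convention, as a sketch: `[reading](-kanji)` becomes raw HTML `<ruby>` markup for HTML output and a `\ruby{kanji}{reading}` command (assumed to come from the LaTeX ruby package) for LaTeX output; any other format, or any ordinary link, is left alone. The exact HTML markup, with `<rp>` fallback brackets, is an assumption of this sketch, and `walk` is a simplified stand-in for pandocfilters' traversal. Here the target format arrives as a plain string rather than a `Maybe Format`.

```python
import json
import sys


def walk(x, action, fmt, meta):
    """Simplified top-down traversal in the style of pandocfilters.walk."""
    if isinstance(x, list):
        out = []
        for item in x:
            if isinstance(item, dict) and "t" in item:
                res = action(item["t"], item.get("c"), fmt, meta)
                if res is None:
                    out.append(walk(item, action, fmt, meta))
                elif isinstance(res, list):
                    out.extend(walk(z, action, fmt, meta) for z in res)
                else:
                    out.append(walk(res, action, fmt, meta))
            else:
                out.append(walk(item, action, fmt, meta))
        return out
    if isinstance(x, dict):
        return {k: walk(v, action, fmt, meta) for k, v in x.items()}
    return x


def handle_ruby(key, value, fmt, meta):
    # Link content is [attr, inlines, [url, title]]; [reading](-kanji) marks ruby.
    if key != "Link":
        return None
    inlines, (url, _title) = value[1], value[2]
    if not url.startswith("-") or len(inlines) != 1 or inlines[0]["t"] != "Str":
        return None
    kanji, reading = url[1:], inlines[0]["c"]
    if fmt == "html":
        html = f"<ruby>{kanji}<rp>(</rp><rt>{reading}</rt><rp>)</rp></ruby>"
        return {"t": "RawInline", "c": ["html", html]}
    if fmt == "latex":
        return {"t": "RawInline", "c": ["latex", f"\\ruby{{{kanji}}}{{{reading}}}"]}
    return None  # other formats: keep the link unchanged


def main():
    doc = json.load(sys.stdin.buffer)
    json.dump(walk(doc, handle_ruby, sys.argv[1], doc.get("meta", {})), sys.stdout)


if __name__ == "__main__" and len(sys.argv) > 1:
    main()
```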

We compile our script:

Then run it:

Note: to use this to generate PDFs via LaTeX, you’ll need to use `--pdf-engine=xelatex`, specify a mainfont that has the Japanese characters (e.g. “Noto Sans CJK TC”), and add `\usepackage{ruby}` to your template or header-includes.

Put all the regular text in a markdown document in ALL CAPS (without touching text in URLs or link titles).

Remove all horizontal rules from a document.

Renumber all enumerated lists with roman numerals.

Replace each delimited code block with class `dot` with an image generated by running `dot -Tpng` (from graphviz) on the contents of the code block.

Find all code blocks with class `python` and run them using the python interpreter, printing the results to the console.

Technical details of JSON filters

A JSON filter is any program which can consume and produce a valid pandoc JSON document representation. This section describes the technical details surrounding the invocation of filters.

Arguments

The program will always be called with the target format as the only argument. A pandoc invocation like `pandoc --filter demo -t html` will cause pandoc to call the program demo with argument html.

Environment variables

Pandoc sets additional environment variables before calling a filter.

- PANDOC_VERSION: the version of pandoc calling the filter, e.g. 2.11.1.

- PANDOC_READER_OPTIONS: JSON object representation of the options passed to the input parser.

Object fields:

- readerAbbreviations: set of known abbreviations (array of strings).

- readerColumns: number of columns in terminal; an integer.

- readerDefaultImageExtension: default extension for images; a string.

- readerExtensions: integer representation of the syntax extensions bit field.

- readerIndentedCodeClasses: default classes for indented code blocks; array of strings.

- readerStandalone: whether the input was a standalone document with header; either true or false.

- readerStripComments: whether HTML comments are stripped instead of parsed as raw HTML; either true or false.

- readerTabStop: width (i.e. equivalent number of spaces) of tab stops; integer.

- readerTrackChanges: track changes setting for docx; one of 'accept-changes', 'reject-changes', and 'all-changes'.
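Reading these variables from a filter is plain environment access. A Python sketch follows; the helper names are hypothetical, and it treats a missing variable as "not run by pandoc" rather than as an error.

```python
import json
import os


def reader_options():
    """Return PANDOC_READER_OPTIONS as a dict (empty if the variable is unset)."""
    raw = os.environ.get("PANDOC_READER_OPTIONS")
    return json.loads(raw) if raw else {}


def pandoc_version():
    """Return PANDOC_VERSION as a tuple of ints, e.g. (2, 11, 1); () if unset."""
    raw = os.environ.get("PANDOC_VERSION", "")
    return tuple(int(part) for part in raw.split(".") if part.isdigit())
```

A filter could, for instance, branch on `pandoc_version() >= (2, 11)` or use `reader_options().get("readerTabStop", 4)` when it needs to expand tabs consistently with the reader.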

Supported interpreters

Files passed to the --filter/-F parameter are expected to be executable. However, if the executable bit is not set, then pandoc tries to guess a suitable interpreter from the file extension.

| file extension | interpreter |
|---|---|
| .py | python |
| .hs | runhaskell |
| .pl | perl |
| .rb | ruby |
| .php | php |
| .js | node |
| .r | Rscript |
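The fallback rule can be sketched in Python as below. It is a simplification, not pandoc's actual code: it only checks the executable bit and the table above, whereas pandoc also looks for filters in further places (such as a `filters` directory in its user data directory and the `PATH`). Raising an error for an unknown extension is this sketch's own choice, and `filter_command` is a hypothetical name.

```python
import os

# Extension -> interpreter, as listed in the table above.
INTERPRETERS = {
    ".py": "python",
    ".hs": "runhaskell",
    ".pl": "perl",
    ".rb": "ruby",
    ".php": "php",
    ".js": "node",
    ".r": "Rscript",
}


def filter_command(path):
    """Return the argv used to run a filter file (simplified sketch)."""
    if os.access(path, os.X_OK):
        return [path]  # executable: run it directly
    ext = os.path.splitext(path)[1]
    interpreter = INTERPRETERS.get(ext)
    if interpreter is None:
        raise ValueError(f"{path}: not executable and no known interpreter")
    return [interpreter, path]
```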